Tokenizing Embedding Spaces for Faster, More Relevant Search

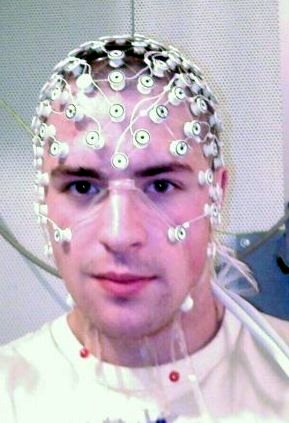

Embedding spaces are quite trendy right now in machine learning. With word2vec, for example, you can create an embedding for words that is capable of capturing analogy. Given the input "man is to king as woman is to what?", a word2vec embedding can be used to correctly answer "queen". (Remarkable, isn't it?) Embeddings like this can be used in a wide variety of domains. For example, facial photos can be projected into an embedding space and used for facial recognition tasks.

However, I wonder if embeddings fall short in a domain that I am very close to: search. Consider the facial recognition task. Each face photo is converted into an N-dimensional vector, where N is often rather high (hundreds of values). Given a sample photograph of a face, if you want to find all of the photos of that person, then you have to search for all the photo vectors near the sample photo's vector. But, due to the curse of dimensionality, very high-dimensional embedding spaces are not amenable to the data structures commonly used for spatial search, such as k-d trees.
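To make the analogy trick and the "find nearby vectors" problem concrete, here is a minimal sketch in plain Python. The vectors are hand-made toy values chosen for illustration, not real word2vec output, and the helper names `cosine`, `nearest`, and `analogy` are hypothetical. Real embeddings would have hundreds of dimensions rather than three.

```python
import math

# Hypothetical 3-d "embeddings", invented for this example only.
TOY_VECTORS = {
    "king":  [0.9,  0.8, 0.1],
    "queen": [0.9, -0.8, 0.1],
    "man":   [0.1,  0.8, 0.0],
    "woman": [0.1, -0.8, 0.0],
    "apple": [0.0,  0.0, 1.0],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

def nearest(query, vectors, exclude=()):
    """Brute-force search: score the query against every stored vector."""
    best_word, best_score = None, -2.0  # cosine is never below -1
    for word, vec in vectors.items():
        if word in exclude:
            continue
        score = cosine(query, vec)
        if score > best_score:
            best_word, best_score = word, score
    return best_word

def analogy(a, b, c, vectors):
    """Answer 'a is to b as c is to ?' by looking near b - a + c."""
    target = [vb - va + vc
              for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    return nearest(target, vectors, exclude={a, b, c})

print(analogy("man", "king", "woman", TOY_VECTORS))  # queen
```

Note that `nearest` has to touch every vector in the collection, so its cost grows linearly with the number of items and with the number of dimensions. That full scan is the fallback you are left with when a spatial index such as a k-d tree stops helping.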